01 MARROW: TEACH ME HOW TO SEE YOU MOTHER

2020, Montreal, Canada.

Developed in collaboration with Avner Peled.

Co-production of the National Film Board of Canada and AtlasV.

Sound by Philippe Lambert.

Animation by Paloma Dawkins.

If machines have mental capacities, do they also have the capacity for mental disorders?

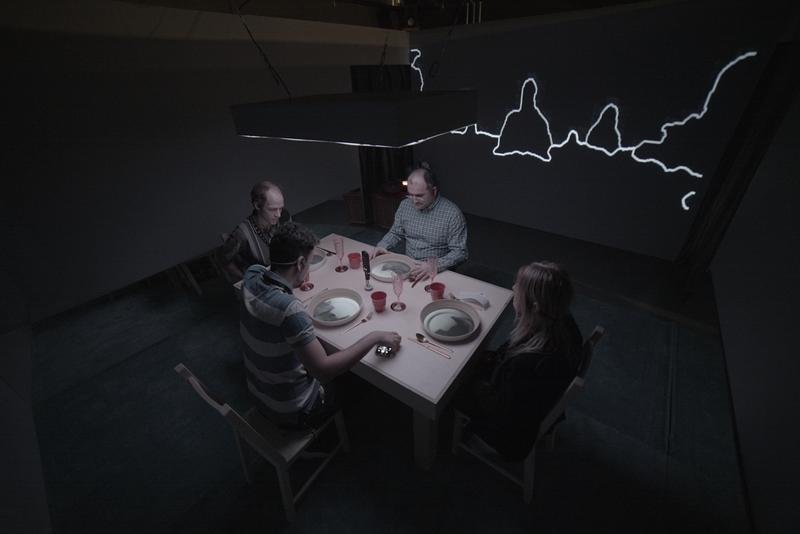

An interactive theater piece where the participants play the role of machine learning models in a family dinner setting.

The project invites participants to play in a scripted scene, surrounded by a world conceived and manipulated by machine learning models. Those AIs fervently attempt to reconstruct what they were trained to recognize as a perfect family dinner. But the machine models are flawed and vulnerable, just like us humans who created them. From technical bugs and glitches found in the algorithms, a scene of a dysfunctional family emerges.

Marrow refers to the soft, fatty tissue in the interior of bones. It is also used to describe the innermost, essential part of something. This experience looks into the inner workings of both machines and humans. Using a family setting, the project explores the training environments of humans and machines: the systems that shape behavior. Through humor and play, we wish to surface some of the serious problems that can occur with AIs, and reflect them back to us as a society.

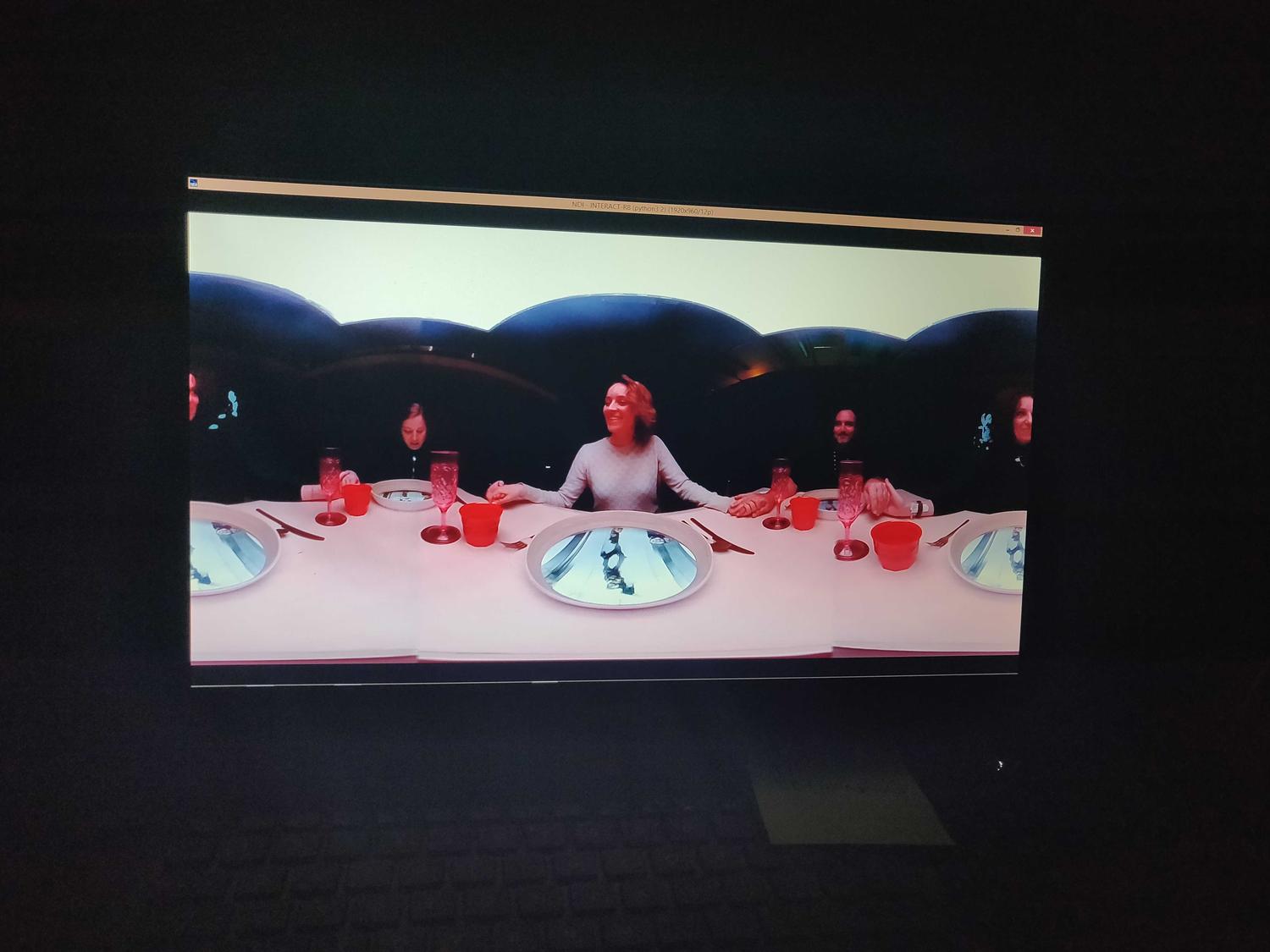

The experience is for four people at a time, invited to gather around a dinner table and act as a family. Before sitting at the table, each participant is randomly assigned the role of one of the family members: Mother, Father, Brother, or Sister. As the participants introduce their characters, we record and clone their voices. Once they are seated, a script is projected onto the dinner plates. The text on the plates, like machine code, drives the experience forward; the participants are compelled to act on it. As the story progresses, the memories, emotions, fears, and hopes of this family are revealed. Interactive visuals and audio surround the room. At the same time, bone conduction earphones worn by the participants play the inner thoughts of their AI personas in their own voices. Gradually, the emotional gap between the family members widens. They each experience a different side of the same story.

The disorders presented in the story are inspired by the generative adversarial network (GAN). A GAN is prone to a failure state known as non-convergence: an endless oscillation in which the two internal parts of the model, the discriminator and the generator, battle over their perceptions of reality, shifting from innovation to stagnation and from the real to the fake. As the room and story unravel, the level of complexity rises, and the disorders of the GAN begin to surface. The participants slowly become immersed in a fantastical environment where the barriers between inner and outer worlds, humans and machines, and real and fake are dissolving. What was meant to be a pleasant and mundane family dinner evolves into a simulation of an intimate universe where you can never guess what happens next.
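For readers curious about what non-convergence looks like in practice, here is a minimal sketch. It is a toy, not the model used in the piece: it assumes a two-player game with the objective f(x, y) = x * y, where a stand-in "generator" controls x and tries to lower f, and a stand-in "discriminator" controls y and tries to raise it. The function names and parameters are hypothetical, chosen only for illustration.

```python
import math


def simulate(steps=200, lr=0.1, x=1.0, y=1.0):
    """Simultaneous gradient descent-ascent on the toy objective f(x, y) = x * y.

    x plays the "generator" (minimizes f), y plays the "discriminator"
    (maximizes f). The only equilibrium is (0, 0).
    """
    history = [(x, y)]
    for _ in range(steps):
        grad_x = y  # df/dx
        grad_y = x  # df/dy
        # Both players update at the same time from the same old state.
        x, y = x - lr * grad_x, y + lr * grad_y
        history.append((x, y))
    return history


def distance_from_equilibrium(point):
    """Euclidean distance of (x, y) from the equilibrium at (0, 0)."""
    return math.hypot(point[0], point[1])


history = simulate()

# Count how often the "generator" parameter flips sign: a sign of oscillation.
sign_changes = sum(
    1 for (x0, _), (x1, _) in zip(history, history[1:]) if x0 * x1 < 0
)

start = distance_from_equilibrium(history[0])
end = distance_from_equilibrium(history[-1])
print(f"sign changes in x: {sign_changes}")
print(f"distance from equilibrium: {start:.3f} -> {end:.3f}")
```

In this toy the two players circle the equilibrium instead of settling on it, and each step carries them slightly farther away. Real GAN training is far more complex, but the same tug-of-war between two opposed updates is one commonly cited source of the oscillation described above.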

During development we published several creative tools: one for preparing small datasets, a collaborative tool for working in GAN latent space, and one for generating images in real time using a 360 camera and GauGAN.
